Recent searches

Search options

#humanvsai

0 posts0 participants0 posts today

Replied in thread

@Michal Bryxí  And while I'm at it, here's a quote-post of my comment in which I review the second AI description.

And while I'm at it, here's a quote-post of my comment in which I review the second AI description.

Jupiter Rowland wrote the following post Sat, 18 May 2024 00:24:46 +0200 It's almost hilarious how clueless the AI was again. And how wrong.

First of all, the roof isn't curved in the traditional sense. The end piece kind of is, but the roof behind it is more complex. Granted, unlike me, the AI can't look behind the roof end, so it doesn't know.

Next, the roof end isn't reflective. It isn't even glossy. And brushed stainless steel shouldn't really reflect anything.

The AI fails to count the columns that hold the roof end, and it claims they're evenly spaced. They're anything but.

There are three letters "M" on the emblem, but none of them is stand-alone.There is visible text on the logo that does provide additional context: "Universal Campus", "patefacio radix" and "MMXI". Maybe LLaVA would have been able to decipher at least the former, had I fed it the image at its original resolution of 2100x1400 pixels instead of the one I've uploaded with a resolution of 800x533 pixels. Decide for yourself which was or would have been cheating.

"Well-maintained lawn". Ha. The lawn is painted on, and the ground is so bumpy that I wouldn't call it well-maintained.

The entrance of the building is visible. In fact, three of the five entrances are. Four if you count the one that can be seen through the glass on the front. And the main entrance is marked with that huge structure around it.

The "few scattered clouds" are mostly one large cloud.

At least LLaVA is still capable of recognising a digital rendering and tells us how. Just you wait until PBR is out, LLaVA.

#Long #LongPost #CWLong #CWLongPost #FediMeta #FediverseMeta #CWFediMeta #CWFediverseMeta #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImageDescriptionMeta #AI #LLaVA

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

Jupiter Rowland wrote the following post Sat, 18 May 2024 00:24:46 +0200 It's almost hilarious how clueless the AI was again. And how wrong.

First of all, the roof isn't curved in the traditional sense. The end piece kind of is, but the roof behind it is more complex. Granted, unlike me, the AI can't look behind the roof end, so it doesn't know.

Next, the roof end isn't reflective. It isn't even glossy. And brushed stainless steel shouldn't really reflect anything.

The AI fails to count the columns that hold the roof end, and it claims they're evenly spaced. They're anything but.

There are three letters "M" on the emblem, but none of them is stand-alone.There is visible text on the logo that does provide additional context: "Universal Campus", "patefacio radix" and "MMXI". Maybe LLaVA would have been able to decipher at least the former, had I fed it the image at its original resolution of 2100x1400 pixels instead of the one I've uploaded with a resolution of 800x533 pixels. Decide for yourself which was or would have been cheating.

"Well-maintained lawn". Ha. The lawn is painted on, and the ground is so bumpy that I wouldn't call it well-maintained.

The entrance of the building is visible. In fact, three of the five entrances are. Four if you count the one that can be seen through the glass on the front. And the main entrance is marked with that huge structure around it.

The "few scattered clouds" are mostly one large cloud.

At least LLaVA is still capable of recognising a digital rendering and tells us how. Just you wait until PBR is out, LLaVA.

#Long #LongPost #CWLong #CWLongPost #FediMeta #FediverseMeta #CWFediMeta #CWFediverseMeta #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImageDescriptionMeta #AI #LLaVA

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

hub.netzgemeinde.euJupiter RowlandAn avatar roaming the decentralised and federated 3-D virtual worlds based on OpenSimulator, a free and open-source server-side re-implementation of Second Life. Mostly talking about OpenSim, sometimes about other virtual worlds, occasionally about the Fediverse beyond Mastodon. No, the Fediverse is not only Mastodon.

If you're looking for real-life people posting about real-life topics, go look somewhere else. This channel is never about real life.

Even if you see me on Mastodon, I'm not on Mastodon myself. I'm on [url=https://hubzilla.org]Hubzilla[/url] which is neither a Mastodon instance nor a Mastodon fork. In fact, it's older and much more powerful than Mastodon. And it has always been connected to Mastodon.

I regularly write posts with way more than 500 characters. If that disturbs you, block me now, but don't complain. I'm not on Mastodon, I don't have a character limit here.

I rather give too many content warnings than too few. But I have absolutely no means of blanking out pictures for Mastodon users.

I always describe my images, no matter how long it takes. My posts with image descriptions tend to be my longest. Don't go looking for my image descriptions in the alt-text; they're always in the post text which is always hidden behind a content warning due to being over 500 characters long.

If you follow me, and I "follow" you back, I don't actually follow you and receive your posts. Unless you've got something to say that's interesting to me within the scope of this channel, or I know you from OpenSim, I'll most likely deny you the permission to send me your posts. I only "follow" you back because Hubzilla requires me to do that to allow you to follow me. But I do let you send me your comments and direct messages. If you boost a lot of uninteresting stuff, I'll block you boosts.

My "birthday" isn't my actual birthday but my rezday. My first avatar has been around since that day.

If you happen to know German, maybe my "homepage" is something for you, a blog which, much like this channel, is about OpenSim and generally virtual worlds.

#[zrl=https://hub.netzgemeinde.eu/search?tag=OpenSim]OpenSim[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=OpenSimulator]OpenSimulator[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=VirtualWorlds]VirtualWorlds[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=Metaverse]Metaverse[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=SocialVR]SocialVR[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=fedi22]fedi22[/zrl]

Replied in thread

@Michal Bryxí  And since you obviously haven't actually read anything I've linked to, here's a quote-post of my comment in which I dissect the first AI description.

And since you obviously haven't actually read anything I've linked to, here's a quote-post of my comment in which I dissect the first AI description.

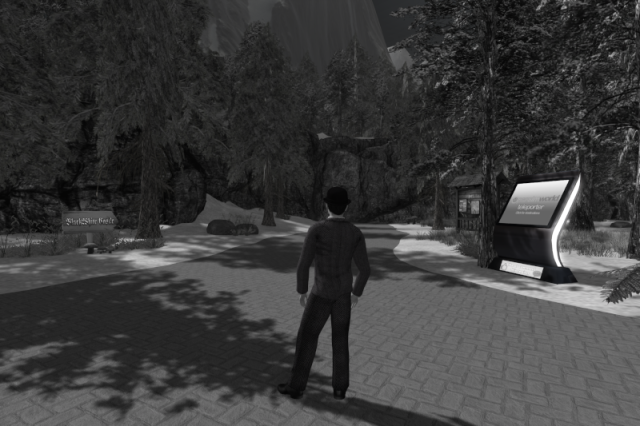

Jupiter Rowland wrote the following post Tue, 05 Mar 2024 20:28:12 +0100 (This is actually a comment. Find another post further up in this thread.)

Now let's pry LLaVA's image description apart, shall we?

Typical for an AI: It starts vague. That's because it isn't really sure what it's looking at.

This is not a video game. It's a 3-D virtual world.

At least, LLaVA didn't take this for a real-life photograph.

It's an avatar, not a character.

This is the first time that the AI is accurate without being vague. However, there could be more details to this.

And I can and do tell the audience in my own image description why my avatar is facing away from the viewer. Oh, and that it's the avatar of the creator of this picture, namely myself.

Nope. Like the AI could see the eyeballs of my avatar from behind. The avatar is actually looking at the cliff in the background.

Also, it's clearly an advertising board.

If I'm generous, I can let this pass as not exactly wrong. Only that there is no dense canopy, and this is not a park.

Nope again. It's actually late morning. The AI doesn't know because it can't tell that the Sun is in the southeast, and because it has got no idea how tall the trees actually are, what with almost all treetops and half the shadow cast by the avatar being out of frame.

In a setting inspired by thrillers from the 1950s and 1960s. You're adorable, LLaVA. Then again, it was quiet because there was no other avatar present.

There's a whole lot in this image that LLaVA didn't mention at all. First of all, the most blatant shortcomings.

First of all, the colours. Or the lack of them. LLaVA doesn't say with a single world that everything is monochrome. What it's even less aware of is that the motive itself is monochrome, i.e. this whole virtual place is actually monochrome, and the avatar is monochrome, too.

Next, what does my avatar look like? Gender? Skin? Hair? Clothes?

Then there's that thing on the right. LLaVA doesn't even mention that this thing is there.

It doesn't mention the sign to the left, it doesn't mention the cliff at the end of the path, it doesn't mention the mountains in the background, and it's unaware of both the bit of sky near the top edge and the large building hidden behind the trees.

And it does not transcribe even one single bit of text in this image.

And now for what I think should really be in the description, but what no AI will ever be able to describe from looking at an image like this one.

A good image description should mention where an image was taken. AIs can currently only tell that when they're fed famous landmarks. AI won't be able to tell from looking at this image that it was taken at the central crossroads at Black White Castle, a sim in the OpenSim-based Pangea Grid anytime soon. And I'm not even talking about explaining OpenSim, grids and all that to people who don't know what it is.

Speaking of which, the object to the right. LLaVA completely ignores it. However, it should be able to not only correctly identify it as an OpenSimWorld beacon, but also describe what it looks like and explain to the reader what an OpenSimWorld beacon is, what OpenSimWorld is etc. because it should know that this can not be expected to be common knowledge. My own description does that in round about 5,000 characters.

And LLaVA should transcribe what's written on the touch screen which it should correctly identify as a touch screen. It should also mention the sign on the left and transcribe what's written on it.

In fact, all text anywhere within the borders of the picture should be transcribed 100% verbatim. Since there's no rule against transcribing text that's so small that it's illegible or that's so tiny that it's practically invisible or that's partially obscured or partially out of frame, a good AI should be capable of transcribing such text 100% verbatim in its entirety as well. Unless text is too small for me to read in-world, I can and do that.

And how about not only knowing that the advertising board is an advertising board, but also mentioning and describing what's on it? Technically speaking, there's actually a lot of text on that board, and in order to transcribe it, its context needs to be described. That is, I must admit I was sloppy myself and omitted a whole lot of transcriptions in my own description.

Still, AI has a very very long way to go. And it will never fully get there.

#Long #LongPost #CWLong #CWLongPost #AltText #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImageDescriptionMeta #AI #LLaVA

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

Jupiter Rowland wrote the following post Tue, 05 Mar 2024 20:28:12 +0100 (This is actually a comment. Find another post further up in this thread.)

Now let's pry LLaVA's image description apart, shall we?

The image appears to be a 3D rendering or a screenshot from a video game or a virtual environment.

Typical for an AI: It starts vague. That's because it isn't really sure what it's looking at.

This is not a video game. It's a 3-D virtual world.

At least, LLaVA didn't take this for a real-life photograph.

It shows a character

It's an avatar, not a character.

standing on a paved path with a brick-like texture.

This is the first time that the AI is accurate without being vague. However, there could be more details to this.

The character is facing away from the viewer,

And I can and do tell the audience in my own image description why my avatar is facing away from the viewer. Oh, and that it's the avatar of the creator of this picture, namely myself.

looking towards a sign or information board on the right side of the image.

Nope. Like the AI could see the eyeballs of my avatar from behind. The avatar is actually looking at the cliff in the background.

Also, it's clearly an advertising board.

The environment is forested with tall trees and a dense canopy, suggesting a natural, possibly park-like setting.

If I'm generous, I can let this pass as not exactly wrong. Only that there is no dense canopy, and this is not a park.

The lighting is subdued, with shadows cast by the trees, indicating either early morning or late afternoon.

Nope again. It's actually late morning. The AI doesn't know because it can't tell that the Sun is in the southeast, and because it has got no idea how tall the trees actually are, what with almost all treetops and half the shadow cast by the avatar being out of frame.

The overall atmosphere is calm and serene.

In a setting inspired by thrillers from the 1950s and 1960s. You're adorable, LLaVA. Then again, it was quiet because there was no other avatar present.

There's a whole lot in this image that LLaVA didn't mention at all. First of all, the most blatant shortcomings.

First of all, the colours. Or the lack of them. LLaVA doesn't say with a single world that everything is monochrome. What it's even less aware of is that the motive itself is monochrome, i.e. this whole virtual place is actually monochrome, and the avatar is monochrome, too.

Next, what does my avatar look like? Gender? Skin? Hair? Clothes?

Then there's that thing on the right. LLaVA doesn't even mention that this thing is there.

It doesn't mention the sign to the left, it doesn't mention the cliff at the end of the path, it doesn't mention the mountains in the background, and it's unaware of both the bit of sky near the top edge and the large building hidden behind the trees.

And it does not transcribe even one single bit of text in this image.

And now for what I think should really be in the description, but what no AI will ever be able to describe from looking at an image like this one.

A good image description should mention where an image was taken. AIs can currently only tell that when they're fed famous landmarks. AI won't be able to tell from looking at this image that it was taken at the central crossroads at Black White Castle, a sim in the OpenSim-based Pangea Grid anytime soon. And I'm not even talking about explaining OpenSim, grids and all that to people who don't know what it is.

Speaking of which, the object to the right. LLaVA completely ignores it. However, it should be able to not only correctly identify it as an OpenSimWorld beacon, but also describe what it looks like and explain to the reader what an OpenSimWorld beacon is, what OpenSimWorld is etc. because it should know that this can not be expected to be common knowledge. My own description does that in round about 5,000 characters.

And LLaVA should transcribe what's written on the touch screen which it should correctly identify as a touch screen. It should also mention the sign on the left and transcribe what's written on it.

In fact, all text anywhere within the borders of the picture should be transcribed 100% verbatim. Since there's no rule against transcribing text that's so small that it's illegible or that's so tiny that it's practically invisible or that's partially obscured or partially out of frame, a good AI should be capable of transcribing such text 100% verbatim in its entirety as well. Unless text is too small for me to read in-world, I can and do that.

And how about not only knowing that the advertising board is an advertising board, but also mentioning and describing what's on it? Technically speaking, there's actually a lot of text on that board, and in order to transcribe it, its context needs to be described. That is, I must admit I was sloppy myself and omitted a whole lot of transcriptions in my own description.

Still, AI has a very very long way to go. And it will never fully get there.

#Long #LongPost #CWLong #CWLongPost #AltText #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImageDescriptionMeta #AI #LLaVA

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

hub.netzgemeinde.euJupiter RowlandAn avatar roaming the decentralised and federated 3-D virtual worlds based on OpenSimulator, a free and open-source server-side re-implementation of Second Life. Mostly talking about OpenSim, sometimes about other virtual worlds, occasionally about the Fediverse beyond Mastodon. No, the Fediverse is not only Mastodon.

If you're looking for real-life people posting about real-life topics, go look somewhere else. This channel is never about real life.

Even if you see me on Mastodon, I'm not on Mastodon myself. I'm on [url=https://hubzilla.org]Hubzilla[/url] which is neither a Mastodon instance nor a Mastodon fork. In fact, it's older and much more powerful than Mastodon. And it has always been connected to Mastodon.

I regularly write posts with way more than 500 characters. If that disturbs you, block me now, but don't complain. I'm not on Mastodon, I don't have a character limit here.

I rather give too many content warnings than too few. But I have absolutely no means of blanking out pictures for Mastodon users.

I always describe my images, no matter how long it takes. My posts with image descriptions tend to be my longest. Don't go looking for my image descriptions in the alt-text; they're always in the post text which is always hidden behind a content warning due to being over 500 characters long.

If you follow me, and I "follow" you back, I don't actually follow you and receive your posts. Unless you've got something to say that's interesting to me within the scope of this channel, or I know you from OpenSim, I'll most likely deny you the permission to send me your posts. I only "follow" you back because Hubzilla requires me to do that to allow you to follow me. But I do let you send me your comments and direct messages. If you boost a lot of uninteresting stuff, I'll block you boosts.

My "birthday" isn't my actual birthday but my rezday. My first avatar has been around since that day.

If you happen to know German, maybe my "homepage" is something for you, a blog which, much like this channel, is about OpenSim and generally virtual worlds.

#[zrl=https://hub.netzgemeinde.eu/search?tag=OpenSim]OpenSim[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=OpenSimulator]OpenSimulator[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=VirtualWorlds]VirtualWorlds[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=Metaverse]Metaverse[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=SocialVR]SocialVR[/zrl] #[zrl=https://hub.netzgemeinde.eu/search?tag=fedi22]fedi22[/zrl]

Replied in thread

@Michal Bryxí

The context matters. A whole lot.

A simple real-life cat photograph can be described in a few hundred characters, and everyone knows what it's all about. It doesn't need much visual description because it's mainly only the cat that matters. Just about everyone knows what real-life cats generally look like, except from the ways they differ from one another. Even people born 100% blind should have a rough enough idea what a cat is and what it looks like from a) being told it if they inquire and b) touching and petting a few cats.

Thus, most elements of a real-life cat photograph can safely be assumed to be common knowledge. They don't require description, and they don't require explanation because everyone should know what a cat is.

Now, let's take the image which LLaVA has described in 558 characters, and which I've previously described in 25,271 characters.

For one, it doesn't focus on anything. It shows an entire scene. If the visual description has to include what's important, it has to include everything in the image because everything in the image is important just the same.

Besides, it's a picture from a 3-D virtual world. Not from the real world. People don't know anything about this kind of 3-D virtual worlds in general, and they don't know anything about this place in particular. In this picture, nothing can safely be assumed to be common knowledge. For blind or visually-impaired users even less.

People may want to know where this image was made. AI won't be able to figure that out. AI can't examine that picture and immediately and with absolute certainty recognise that it was created on a sim called Black-White Castle on an OpenSim grid named Pangea Grid, especially seeing as that place was only a few days old when I was there. LLaVA wasn't even sure if it's a video game or a virtual world. So AI won't be able to tell people.

AI doesn't know either whether or not any of the location information can be considered common knowledge and therefore necessarily to explain so humans will understand it.

I, the human describer, on the other hand, can tell people where exactly this image was made. And I can explain it to them in such a way that they'll understand it with zero prior knowledge about the matter.

Next point: text transcripts. LLaVA didn't even notice that there is text in the image, much less transcribe it. Not transcribing every bit of text in an image is sloppy; not transcribing any text in an image is ableist.

No other AI will even be able to transcribe the text in this image, however. That's because no AI can read any of it. It's all too small and, on top of that, too low-contrast for reliable OCR. All that AI has is the image I've posted at a resolution of 800x533 pixels.

I myself can see the scenery at nigh-infinite resolution by going there. No AI can do that, and no LLM AI will ever be able to do that. And so I can read and transcribe all text in the image 100% verbatim with 100% accuracy.

However, text transcripts require some room in the description, also because they additionally require descriptions of where the text is.

I win again. And so does the long, detailed description.

I'm not sure if this is typical Mastodon behaviour because it's impossible for Mastodon users to imagine that images can be described elsewhere than in the alt-text (they can, and I have), or if it's intentional trolling.

The 25,271 characters did not go into the alt-text! They went into the post.

I can put so many characters into a post. I'm not on Mastodon. I'm on Hubzilla which has never had and still doesn't have any character limits.

In the alt-text, there's a separate, shorter, still self-researched and hand-written image description to satisfy those who absolutely demand there be an image description in the alt-text.

25,271 characters in alt-text would cause Mastodon to cut 23,771 characters off and throw them away.

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

Without any context

The context matters. A whole lot.

A simple real-life cat photograph can be described in a few hundred characters, and everyone knows what it's all about. It doesn't need much visual description because it's mainly only the cat that matters. Just about everyone knows what real-life cats generally look like, except from the ways they differ from one another. Even people born 100% blind should have a rough enough idea what a cat is and what it looks like from a) being told it if they inquire and b) touching and petting a few cats.

Thus, most elements of a real-life cat photograph can safely be assumed to be common knowledge. They don't require description, and they don't require explanation because everyone should know what a cat is.

Now, let's take the image which LLaVA has described in 558 characters, and which I've previously described in 25,271 characters.

For one, it doesn't focus on anything. It shows an entire scene. If the visual description has to include what's important, it has to include everything in the image because everything in the image is important just the same.

Besides, it's a picture from a 3-D virtual world. Not from the real world. People don't know anything about this kind of 3-D virtual worlds in general, and they don't know anything about this place in particular. In this picture, nothing can safely be assumed to be common knowledge. For blind or visually-impaired users even less.

People may want to know where this image was made. AI won't be able to figure that out. AI can't examine that picture and immediately and with absolute certainty recognise that it was created on a sim called Black-White Castle on an OpenSim grid named Pangea Grid, especially seeing as that place was only a few days old when I was there. LLaVA wasn't even sure if it's a video game or a virtual world. So AI won't be able to tell people.

AI doesn't know either whether or not any of the location information can be considered common knowledge and therefore necessarily to explain so humans will understand it.

I, the human describer, on the other hand, can tell people where exactly this image was made. And I can explain it to them in such a way that they'll understand it with zero prior knowledge about the matter.

Next point: text transcripts. LLaVA didn't even notice that there is text in the image, much less transcribe it. Not transcribing every bit of text in an image is sloppy; not transcribing any text in an image is ableist.

No other AI will even be able to transcribe the text in this image, however. That's because no AI can read any of it. It's all too small and, on top of that, too low-contrast for reliable OCR. All that AI has is the image I've posted at a resolution of 800x533 pixels.

I myself can see the scenery at nigh-infinite resolution by going there. No AI can do that, and no LLM AI will ever be able to do that. And so I can read and transcribe all text in the image 100% verbatim with 100% accuracy.

However, text transcripts require some room in the description, also because they additionally require descriptions of where the text is.

I win again. And so does the long, detailed description.

Would you rather have alt text that is:

I'm not sure if this is typical Mastodon behaviour because it's impossible for Mastodon users to imagine that images can be described elsewhere than in the alt-text (they can, and I have), or if it's intentional trolling.

The 25,271 characters did not go into the alt-text! They went into the post.

I can put so many characters into a post. I'm not on Mastodon. I'm on Hubzilla which has never had and still doesn't have any character limits.

In the alt-text, there's a separate, shorter, still self-researched and hand-written image description to satisfy those who absolutely demand there be an image description in the alt-text.

25,271 characters in alt-text would cause Mastodon to cut 23,771 characters off and throw them away.

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

hub.netzgemeinde.euNetzgemeinde/Hubzilla

Replied in thread

@Michal Bryxí

Won't happen.

Maybe AI sometimes happens to be as good as humans when it comes to describing generic, everyday images that are easy to describe. By the way, I keep seeing AI miserably failing to describe cat photos.

But when it comes to extremely obscure niche content, AI can only produce useless train wrecks. And this will never change. When it comes to extremely obscure niche content, AI not only requires full, super-detailed, up-to-date-by-the-minute knowledge of all aspects of the topic, down to niches within niches within the niche, but it must be able to explain it, and it must know that and inhowfar it's necessary to explain it.

I've pitted LLaVA against my own hand-written image descriptions. Twice. Not simply against the short image descriptions in my alt-texts, but against the full, long, detailed, explanatory image descriptions in the posts.

And LLaVA failed so, so miserably. What little it described, it often got it wrong. More importantly, LLaVA's descriptions were nowhere near explanatory enough for a casual audience with no prior knowledge in the topic to really understand the image.

500+ characters generated by LLaVA in five seconds are no match against my own 25,000+ characters that took me eight hours to research and write.

1,100+ characters generated by LLaVA in 30 seconds are no match against my own 60,000+ characters that took me two full days to research and write.

When I describe my images, I put abilities to use that AI will never have. Including, but not limited to the ability to join and navigate 3-D virtual worlds. Not to mention that an AI would have to be able to deduce from a picture where exactly a virtual world image was created, and how to get there.

So no, ChatGPT won't write circles around me by next year. Or ever. Neither will any other AI out there.

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

Prediction: Alt text will be generated by AI directly on the consumer's side so that *they* can tell what detail, information density, parts of the picture are important for *them*. And pre-written alt text will be frowned upon.

Won't happen.

Maybe AI sometimes happens to be as good as humans when it comes to describing generic, everyday images that are easy to describe. By the way, I keep seeing AI miserably failing to describe cat photos.

But when it comes to extremely obscure niche content, AI can only produce useless train wrecks. And this will never change. When it comes to extremely obscure niche content, AI not only requires full, super-detailed, up-to-date-by-the-minute knowledge of all aspects of the topic, down to niches within niches within the niche, but it must be able to explain it, and it must know that and inhowfar it's necessary to explain it.

I've pitted LLaVA against my own hand-written image descriptions. Twice. Not simply against the short image descriptions in my alt-texts, but against the full, long, detailed, explanatory image descriptions in the posts.

And LLaVA failed so, so miserably. What little it described, it often got it wrong. More importantly, LLaVA's descriptions were nowhere near explanatory enough for a casual audience with no prior knowledge in the topic to really understand the image.

500+ characters generated by LLaVA in five seconds are no match against my own 25,000+ characters that took me eight hours to research and write.

1,100+ characters generated by LLaVA in 30 seconds are no match against my own 60,000+ characters that took me two full days to research and write.

When I describe my images, I put abilities to use that AI will never have. Including, but not limited to the ability to join and navigate 3-D virtual worlds. Not to mention that an AI would have to be able to deduce from a picture where exactly a virtual world image was created, and how to get there.

So no, ChatGPT won't write circles around me by next year. Or ever. Neither will any other AI out there.

#Long #LongPost #CWLong #CWLongPost #VirtualWorlds #AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImagDescriptionMeta #LLaVA #AI #AIVsHuman #HumanVsAI

llava-vl.github.ioLLaVAVisual Instruction Tuning

Replied in thread

@Stefan Bohacek Well, I already do, and I guess you know by now.

At least I don't think my image descriptions are "basic". They may be "plain" and not "inspiring", but if "basic" with no drivel in-between already amounts to anything between 25,000 and over 60,000 characters, should I really decorate my image descriptions and inflate them further?

#AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImageDescriptionMeta #AI #AIVsHuman #HumanVsAI

At least I don't think my image descriptions are "basic". They may be "plain" and not "inspiring", but if "basic" with no drivel in-between already amounts to anything between 25,000 and over 60,000 characters, should I really decorate my image descriptions and inflate them further?

#AltText #AltTextMeta #CWAltTextMeta #ImageDescription #ImageDescriptions #ImageDescriptionMeta #CWImageDescriptionMeta #AI #AIVsHuman #HumanVsAI

hub.netzgemeinde.euNetzgemeinde/Hubzilla

Have you ever taken part in a translation slam? (I haven't.) This one's fascinating, though:

- Concept: 2 human translators vs. MT/AI (against the clock).

- Subjects: opera aria, fiction & an experimental novel.

- Winners: you may be surprised.

Who's your money on?

@GI_weltweit(Wo)man versus machineIf you have ever been to a translation slam – where two translators compare their versions of the same text in front of a live audience – you will know that it’s an event format based on an anxiety dream. Having your work scrutinised and weaknesses pored over in public is a scary prospect. Congratulations to the Goethe-Institut London, then, for finding a way to make the experience still more terrifying for the people on stage: by adding a third slammer with the ability to translate instantly, and with an inhumanly large corpus of linguistic knowledge at their disposal. Oh, and you don’t get to prepare your translations in advance – no, you’ll have a series of previously unseen texts revealed to you live on stage, and be given just a few minutes to wrestle them into English, while your computer screen is projected behind you for the audience to observe your every decision.<br />