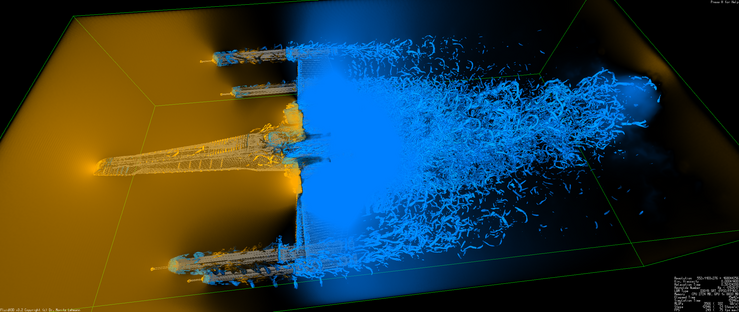

Have you ever programmed a human computer? Having 30 people walk around the room to carry information between RAM addresses and CPU registers, while human CPUs execute operations on the clock, is a very special experience*.

This week I learned more than in about a year of self-study, thanks to the 16th Advanced Scientific Programming in #Python school https://aspp.school

We covered version control, packaging, testing, debugging, computer architecture, some #numpy and #pandas -fu, programming patterns (aka what goes into a class and what doesn't), big-O notation to understand how various operations scale and how to find the fastest one for a given data type and size, and an intro to #multithreading and #multiprocessing

A personal highlight for me was pair programming. I never thought writing code with a buddy would be so much fun, but I learned a lot from my buddies and now I don't want to go back to writing code alone

I'm very indebted to the teachers and organizers: https://aspp.school/wiki/faculty If you ever meet one of them, please buy them a drink for what they have done to improve the state of code karma in the universe

*Our human computer didn't manage to execute even the simplest sorting algorithm, and the CPUs started to sweat. We experienced firsthand what happens when code is ambiguous and imprecise