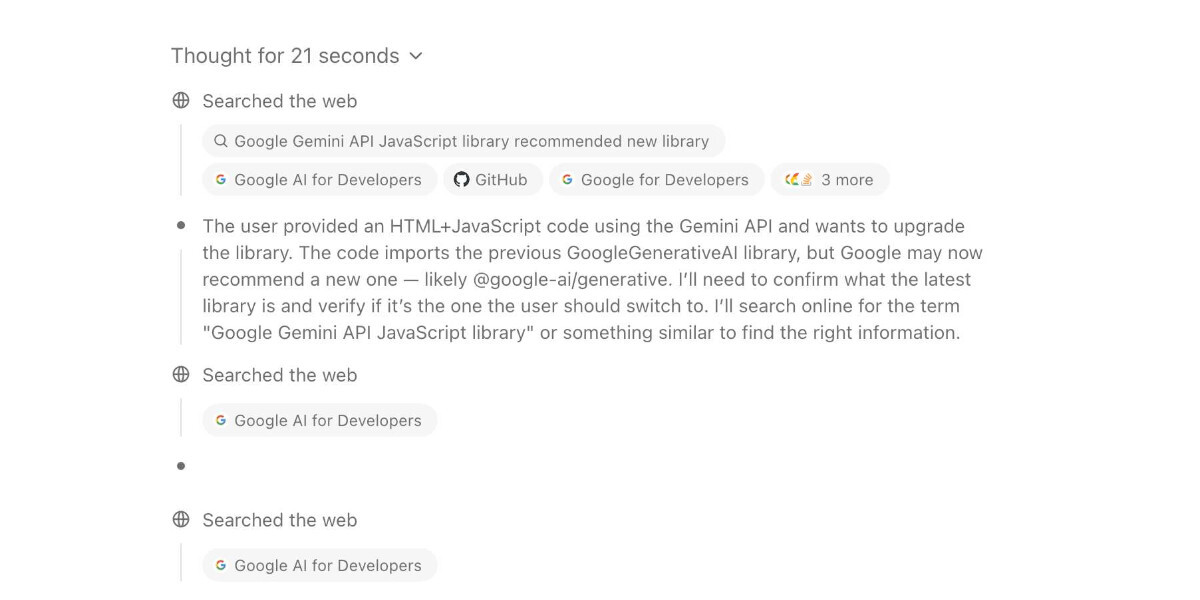

"For the past two and a half years the feature I’ve most wanted from LLMs is the ability to take on search-based research tasks on my behalf. We saw the first glimpses of this back in early 2023, with Perplexity (first launched December 2022, first prompt leak in January 2023) and then the GPT-4 powered Microsoft Bing (which launched/cratered spectacularly in February 2023). Since then a whole bunch of people have taken a swing at this problem, most notably Google Gemini and ChatGPT Search.

Those 2023-era versions were promising but very disappointing. They had a strong tendency to hallucinate details that weren’t present in the search results, to the point that you couldn’t trust anything they told you.

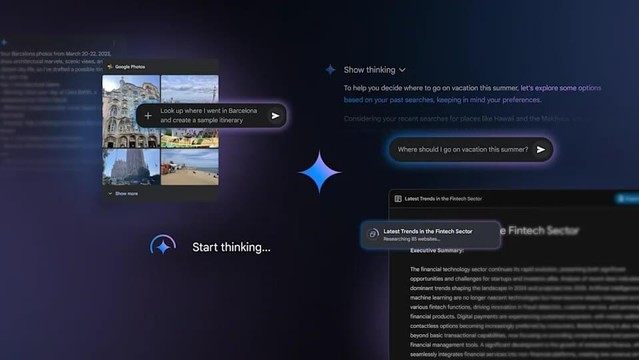

In this first half of 2025 I think these systems have finally crossed the line into being genuinely useful."